Deep Learning with TensorFlow Module 1 Part 3: Fundamentals - Manipulating tensors

Finding patterns in tensors (numerical representations of data) requires manipulating them.

Again, when building models in TensorFlow, much of this pattern discovery is done for you.

Basic operations

You can perform many of the basic mathematical operations directly on tensors using Python operators such as +, - and *.

You can add values to a tensor using the addition operator

import tensorflow as tf
tensor = tf.constant([[10, 7], [3, 4]])
tensor + 10

result

# [out] <tf.Tensor: shape=(2, 2), dtype=int32, numpy= array([[20, 17], [13, 14]], dtype=int32)>

The original tensor is unchanged: the addition returns a new tensor instead of modifying the original in place (tensors created with tf.constant() are immutable).

# Original tensor unchanged
tensor

result

# [out] <tf.Tensor: shape=(2, 2), dtype=int32, numpy= array([[10, 7], [ 3, 4]], dtype=int32)>

Other operators also work.

# Multiplication (known as element-wise multiplication)
tensor * 10

result

# [out] <tf.Tensor: shape=(2, 2), dtype=int32, numpy= array([[100, 70], [ 30, 40]], dtype=int32)>

# Subtraction
tensor - 10

result

# [out] <tf.Tensor: shape=(2, 2), dtype=int32, numpy= array([[ 0, -3], [-7, -6]], dtype=int32)>

You can also use the equivalent TensorFlow functions. The Python operators are shorthands that call these same functions, so both forms can run as part of a TensorFlow graph (for example inside tf.function); using the function explicitly simply makes the operation more obvious.

Use the TensorFlow function equivalent of the '*' (multiply) operator

tf.multiply(tensor, 10)

result

# [out] <tf.Tensor: shape=(2, 2), dtype=int32, numpy= array([[100, 70], [ 30, 40]], dtype=int32)>

The original tensor is still unchanged.

tensor

result

# [out] <tf.Tensor: shape=(2, 2), dtype=int32, numpy= array([[10, 7], [ 3, 4]], dtype=int32)>
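A minimal sketch (not part of the original walkthrough) that checks each Python operator against its TensorFlow function counterpart, tf.add(), tf.subtract() and tf.multiply(), and confirms that the original tensor is never modified:

```python
import tensorflow as tf

tensor = tf.constant([[10, 7], [3, 4]])

# Each Python operator paired with its TensorFlow function counterpart
pairs = {
    "+": (tensor + 10, tf.add(tensor, 10)),
    "-": (tensor - 10, tf.subtract(tensor, 10)),
    "*": (tensor * 10, tf.multiply(tensor, 10)),
}

for op, (with_operator, with_function) in pairs.items():
    # tf.equal compares element-wise; tf.reduce_all collapses that to one boolean
    same = bool(tf.reduce_all(tf.equal(with_operator, with_function)))
    print(f"{op}: operator and function agree -> {same}")

# Constant tensors cannot be modified in place
try:
    tensor[0, 0] = 5
    immutable = False
except TypeError:
    immutable = True

print("tensor is immutable:", immutable)
print(tensor.numpy().tolist())  # [[10, 7], [3, 4]]
```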

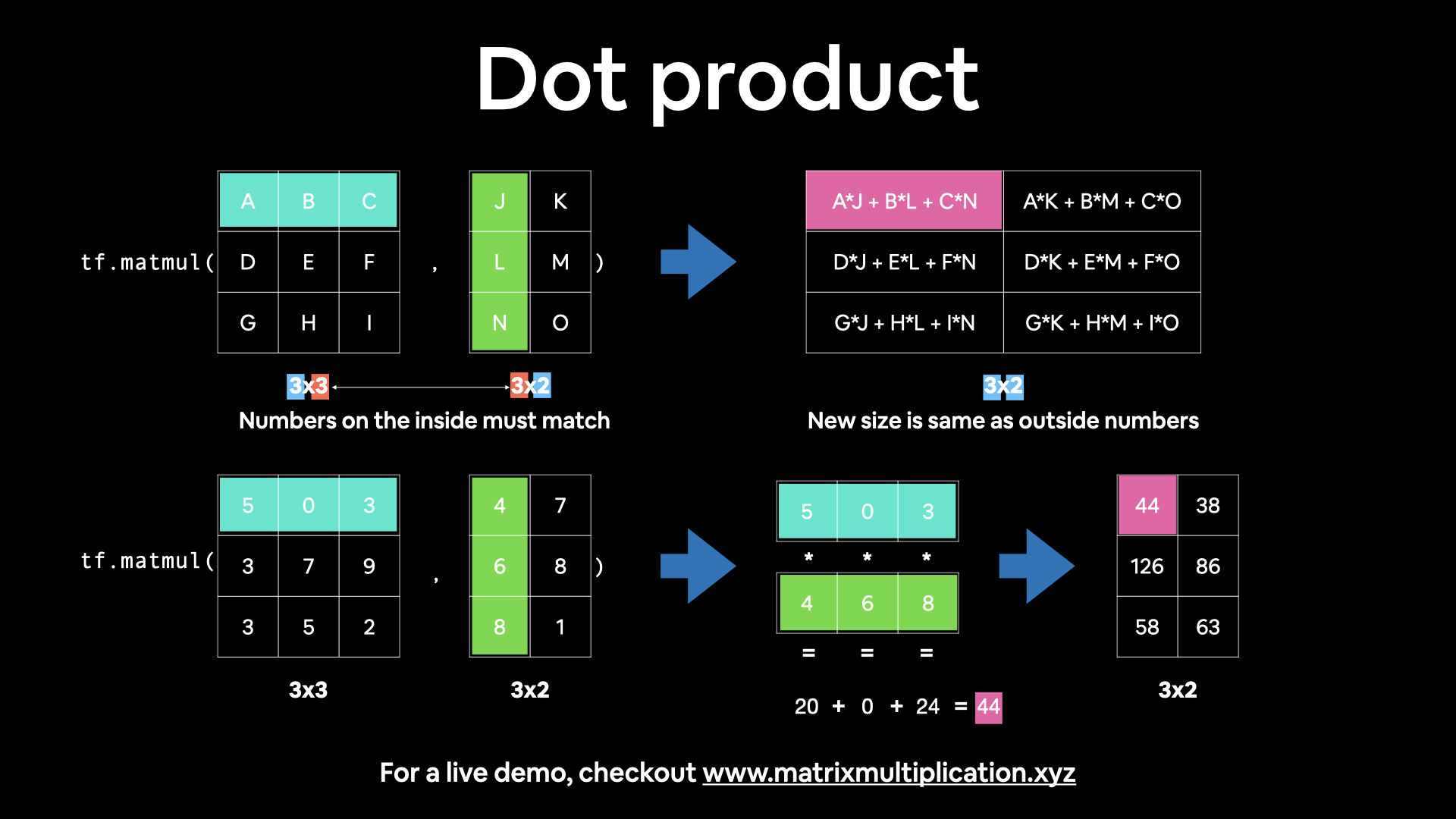

Matrix multiplication

One of the most common operations in machine learning algorithms is matrix multiplication.

TensorFlow implements matrix multiplication in the tf.matmul() function.

The two main rules for matrix multiplication to remember are:

1. The inner dimensions must match:

* (3, 5) @ (3, 5) won't work

* (5, 3) @ (3, 5) will work

* (3, 5) @ (5, 3) will work

2. The resulting matrix has the shape of the outer dimensions:

* (5, 3) @ (3, 5) -> (5, 5)

* (3, 5) @ (5, 3) -> (3, 3)
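To see both rules in action, here is a small sketch that uses tf.ones() to build tensors with the example shapes. The names a and b are illustrative only:

```python
import tensorflow as tf

a = tf.ones((5, 3))
b = tf.ones((3, 5))

print(tf.matmul(a, b).shape)  # inner dims 3 and 3 match -> (5, 5)
print(tf.matmul(b, a).shape)  # inner dims 5 and 5 match -> (3, 3)

# (3, 5) @ (3, 5): inner dims 5 and 3 differ, so TensorFlow raises an error
try:
    tf.matmul(b, b)
    mismatch_raised = False
except tf.errors.InvalidArgumentError as err:
    mismatch_raised = True
    print("Shapes don't line up:", type(err).__name__)
```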

🔑 Note: '@' in Python is the symbol for matrix multiplication.

Matrix multiplication in TensorFlow

print(tensor)
tf.matmul(tensor, tensor)

result

# [out] tf.Tensor( [[10 7] [ 3 4]], shape=(2, 2), dtype=int32) <tf.Tensor: shape=(2, 2), dtype=int32, numpy= array([[121, 98], [ 42, 37]], dtype=int32)>

Matrix multiplication with Python operator '@'

tensor @ tensor

result

# [out] <tf.Tensor: shape=(2, 2), dtype=int32, numpy= array([[121, 98], [ 42, 37]], dtype=int32)>

Both of these examples work because our tensor variable is of shape (2, 2).

What if we created some tensors which had mismatched shapes?

# Create (3, 2) tensor
X = tf.constant([[1, 2], [3, 4], [5, 6]])
# Create another (3, 2) tensor
Y = tf.constant([[7, 8], [9, 10], [11, 12]])
X, Y

result

# [out] (<tf.Tensor: shape=(3, 2), dtype=int32, numpy= array([[1, 2], [3, 4], [5, 6]], dtype=int32)>, <tf.Tensor: shape=(3, 2), dtype=int32, numpy= array([[ 7, 8], [ 9, 10], [11, 12]], dtype=int32)>)

Try to matrix multiply them (will error)

X @ Y

result

# [out] InvalidArgumentError: Matrix size-incompatible: In[0]: [3,2], In[1]: [3,2] [Op:MatMul]

Trying to matrix multiply two tensors with the shape (3, 2) errors because the inner dimensions don't match.

We need to either:

* Reshape X to (2, 3) so it's (2, 3) @ (3, 2).

* Reshape Y to (2, 3) so it's (3, 2) @ (2, 3).

We can do this with either:

* tf.reshape() - allows us to reshape a tensor into a defined shape.

* tf.transpose() - switches the dimensions of a given tensor.

Let's try tf.reshape() first.

tf.reshape(Y, shape=(2, 3))

result

# [out] <tf.Tensor: shape=(2, 3), dtype=int32, numpy= array([[ 7, 8, 9], [10, 11, 12]], dtype=int32)>

Try matrix multiplication with reshaped Y

X @ tf.reshape(Y, shape=(2, 3))

result

# [out] <tf.Tensor: shape=(3, 3), dtype=int32, numpy= array([[ 27, 30, 33], [ 61, 68, 75], [ 95, 106, 117]], dtype=int32)>

It worked. Let's try the same with a transposed X, this time using tf.transpose() and tf.matmul().

# Example of transpose (3, 2) -> (2, 3)
tf.transpose(X)

result

# [out] <tf.Tensor: shape=(2, 3), dtype=int32, numpy= array([[1, 3, 5], [2, 4, 6]], dtype=int32)>

Try matrix multiplication

tf.matmul(tf.transpose(X), Y)

result

# [out] <tf.Tensor: shape=(2, 2), dtype=int32, numpy= array([[ 89, 98], [116, 128]], dtype=int32)>

You can achieve the same result with the transpose_a and transpose_b parameters of tf.matmul()

tf.matmul(a=X, b=Y, transpose_a=True, transpose_b=False)

result

# [out] <tf.Tensor: shape=(2, 2), dtype=int32, numpy= array([[ 89, 98], [116, 128]], dtype=int32)>

Notice the difference in the resulting shapes when transposing X or reshaping Y.

This is because of the 2nd rule mentioned above:

* (2, 3) @ (3, 2) -> (2, 2) done with tf.matmul(tf.transpose(X), Y)

* (3, 2) @ (2, 3) -> (3, 3) done with X @ tf.reshape(Y, shape=(2, 3))

This kind of data manipulation is a reminder: you'll spend a lot of your time in machine learning and working with neural networks reshaping data (in the form of tensors) to prepare it to be used with various operations (such as feeding it to a model).

The dot product

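Multiplying matrices by each other is also referred to as the dot product (each element of the result is the dot product of a row of the first matrix with a column of the second).

You can perform the same operation as tf.matmul() using tf.tensordot() with axes=1.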

Perform the dot product on X and Y (requires X to be transposed)

tf.tensordot(tf.transpose(X), Y, axes=1)

result

# [out] <tf.Tensor: shape=(2, 2), dtype=int32, numpy= array([[ 89, 98], [116, 128]], dtype=int32)>
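The name "dot product" comes from how each element of the result is computed. This sketch redefines X and Y as above so it runs on its own, then checks one element of the tf.tensordot() result against an explicit row-by-column dot product. The indices i and j are arbitrary illustrative choices:

```python
import tensorflow as tf

X = tf.constant([[1, 2], [3, 4], [5, 6]])
Y = tf.constant([[7, 8], [9, 10], [11, 12]])

X_T = tf.transpose(X)                  # shape (2, 3)
result = tf.tensordot(X_T, Y, axes=1)  # shape (2, 2)

# Element [i, j] is the dot product of row i of X_T and column j of Y
i, j = 1, 0
manual = tf.reduce_sum(X_T[i, :] * Y[:, j])  # 2*7 + 4*9 + 6*11
print(result[i, j].numpy(), manual.numpy())  # 116 116

# tf.tensordot(..., axes=1) on two matrices matches tf.matmul
matches_matmul = bool(tf.reduce_all(tf.equal(result, tf.matmul(X_T, Y))))
print(matches_matmul)  # True
```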

You might notice that although both reshape and transpose work, you get different results when using each.

Let's see an example, first with tf.transpose() then with tf.reshape().

Perform matrix multiplication between X and Y (transposed)

tf.matmul(X, tf.transpose(Y))

result

# [out] <tf.Tensor: shape=(3, 3), dtype=int32, numpy= array([[ 23, 29, 35], [ 53, 67, 81], [ 83, 105, 127]], dtype=int32)>

Perform matrix multiplication between X and Y (reshaped)

tf.matmul(X, tf.reshape(Y, (2, 3)))

result

# [out] <tf.Tensor: shape=(3, 3), dtype=int32, numpy= array([[ 27, 30, 33], [ 61, 68, 75], [ 95, 106, 117]], dtype=int32)>

They result in different values.

This may seem strange, because for Y (a (3, 2) matrix), reshaping it to (2, 3) and transposing it produce the same shape.

Check shapes of Y, reshaped Y and transposed Y

Y.shape, tf.reshape(Y, (2, 3)).shape, tf.transpose(Y).shape

result

# [out] (TensorShape([3, 2]), TensorShape([2, 3]), TensorShape([2, 3]))

But calling tf.reshape() and tf.transpose() on Y doesn't necessarily produce the same values.

# Check values of Y, reshaped Y and transposed Y
print("Normal Y:")
print(Y, "\n")  # "\n" for newline
print("Y reshaped to (2, 3):")
print(tf.reshape(Y, (2, 3)), "\n")
print("Y transposed:")
print(tf.transpose(Y))

result

# [out] Normal Y: tf.Tensor( [[ 7 8] [ 9 10] [11 12]], shape=(3, 2), dtype=int32) Y reshaped to (2, 3): tf.Tensor( [[ 7 8 9] [10 11 12]], shape=(2, 3), dtype=int32) Y transposed: tf.Tensor( [[ 7 9 11] [ 8 10 12]], shape=(2, 3), dtype=int32)

As you can see, even though tf.reshape() and tf.transpose() produce tensors of the same shape when called on Y, their values are different.

This can be explained by the default behaviour of each method:

* tf.reshape() - take the values of the given tensor in the order they appear (row by row; in our case 7, 8, 9, 10, 11, 12) and fill them into the new shape in that same order.

* tf.transpose() - swap the order of the axes. By default the axes are reversed (for a 2D tensor, rows become columns), but a different order can be given with the perm parameter.
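The following sketch makes the difference visible. It uses the same Y as above, plus a hypothetical zero tensor Z to show the perm parameter on more than two axes:

```python
import tensorflow as tf

Y = tf.constant([[7, 8], [9, 10], [11, 12]])

# tf.reshape() keeps the row-by-row reading order of the values
flat = tf.reshape(Y, [-1])
print(flat.numpy().tolist())                   # [7, 8, 9, 10, 11, 12]
print(tf.reshape(Y, (2, 3)).numpy().tolist())  # [[7, 8, 9], [10, 11, 12]]

# tf.transpose() moves element [i, j] to [j, i]
print(tf.transpose(Y).numpy().tolist())        # [[7, 9, 11], [8, 10, 12]]

# On a rank 3 tensor, the default reverses the axes; perm chooses any order
Z = tf.zeros((2, 3, 4))
print(tf.transpose(Z).shape)                   # (4, 3, 2)
print(tf.transpose(Z, perm=[0, 2, 1]).shape)   # (2, 4, 3)
```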

So which should you use?

Again, most of the time these operations will be performed for you when they need to run (such as during the training of a neural network).

But generally, whenever performing a matrix multiplication and the shapes of two matrices don't line up, you will transpose (not reshape) one of them in order to line them up.

Matrix multiplication tidbits

- If we transposed Y, it would be represented as $\mathbf{Y}^\mathsf{T}$ (note the capital T for transpose).

- Get an illustrative view of matrix multiplication by Math is Fun.

- Try a hands-on demo of matrix multiplication: http://matrixmultiplication.xyz/ (shown below).

Changing the datatype of a tensor

Sometimes you'll want to alter the default datatype of your tensor.

This is common when you want to compute using less precision (e.g. 16-bit floating point numbers vs. 32-bit floating point numbers).

Computing with less precision is useful on devices with less computing capacity, such as mobile devices (because the fewer bits per number, the less memory the computations require).

You can change the datatype of a tensor using tf.cast().

# Create a new tensor with default datatype (float32)
B = tf.constant([1.7, 7.4])
# Create a new tensor with default datatype (int32)
C = tf.constant([1, 7])
B, C

result

# [out] (<tf.Tensor: shape=(2,), dtype=float32, numpy=array([1.7, 7.4], dtype=float32)>, <tf.Tensor: shape=(2,), dtype=int32, numpy=array([1, 7], dtype=int32)>)

Change from float32 to float16 (reduced precision)

B = tf.cast(B, dtype=tf.float16)
B

result

# [out] <tf.Tensor: shape=(2,), dtype=float16, numpy=array([1.7, 7.4], dtype=float16)>

Change from int32 to float32

C = tf.cast(C, dtype=tf.float32)
C

result

# [out] <tf.Tensor: shape=(2,), dtype=float32, numpy=array([1., 7.], dtype=float32)>
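To put numbers on the precision trade-off mentioned earlier, this sketch reads the bytes per element from each dtype's size attribute. It also shows one behaviour of tf.cast() worth knowing: casting floats to integers truncates toward zero rather than rounding.

```python
import tensorflow as tf

# DType.size is the number of bytes used to store one element
for dtype in (tf.float32, tf.float16):
    print(dtype.name, "uses", dtype.size, "bytes per element")
# float32 uses 4 bytes per element
# float16 uses 2 bytes per element

# Casting floats to integers drops the fractional part (truncation toward zero)
truncated = tf.cast(tf.constant([1.7, -1.7]), dtype=tf.int32)
print(truncated.numpy().tolist())  # [1, -1]
```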

Getting the absolute value

Sometimes you'll want the absolute values (all values are positive) of elements in your tensors.

To do so, you can use tf.abs().

Create tensor with negative values

D = tf.constant([-7, -10])
D

result

# [out] <tf.Tensor: shape=(2,), dtype=int32, numpy=array([ -7, -10], dtype=int32)>

Get the absolute values

tf.abs(D)

result

# [out] <tf.Tensor: shape=(2,), dtype=int32, numpy=array([ 7, 10], dtype=int32)>
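tf.abs() is not limited to integer vectors. This small sketch uses hypothetical tensors M and z to show it on a 2D float tensor and on complex numbers, where it returns the magnitude:

```python
import tensorflow as tf

# Element-wise on any shape, including floats
M = tf.constant([[-1.5, 2.0], [3.25, -4.0]])
print(tf.abs(M).numpy().tolist())  # [[1.5, 2.0], [3.25, 4.0]]

# For complex numbers it returns the magnitude sqrt(re^2 + im^2)
z = tf.constant([3 + 4j, -6 + 8j])
print(tf.abs(z).numpy().tolist())  # [5.0, 10.0]
```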

Finding the min, max, mean, sum (aggregation)

You can quickly aggregate tensors (perform a calculation on a whole tensor) to find things like the minimum value, maximum value, mean and sum of all the elements.

To do so, aggregation methods typically have the syntax reduce_[action](), such as:

* tf.reduce_min() - find the minimum value in a tensor.

* tf.reduce_max() - find the maximum value in a tensor (helpful for when you want to find the highest prediction probability).

* tf.reduce_mean() - find the mean of all elements in a tensor.

* tf.reduce_sum() - find the sum of all elements in a tensor.

🔑 Note: each of these lives in the math module, e.g. tf.math.reduce_min(), but you can use the alias tf.reduce_min().

Let's see them in action.

Create a tensor with 50 random integers from 0 to 99 (np.random.randint() excludes high)

import numpy as np
E = tf.constant(np.random.randint(low=0, high=100, size=50))
E

result

# [out] <tf.Tensor: shape=(50,), dtype=int64, numpy= array([74, 23, 69, 33, 93, 11, 26, 49, 64, 27, 45, 90, 88, 94, 52, 24, 12, 13, 55, 58, 83, 35, 83, 3, 9, 1, 1, 15, 2, 14, 36, 13, 91, 20, 27, 55, 55, 57, 64, 51, 48, 17, 58, 87, 23, 86, 61, 74, 27, 38])>

Find the minimum

tf.reduce_min(E)

result

# [out] <tf.Tensor: shape=(), dtype=int64, numpy=1>

Find the maximum

tf.reduce_max(E)

result

# [out] <tf.Tensor: shape=(), dtype=int64, numpy=94>

Find the mean

tf.reduce_mean(E)

result

# [out] <tf.Tensor: shape=(), dtype=int64, numpy=44>

Find the sum

tf.reduce_sum(E)

result

# [out] <tf.Tensor: shape=(), dtype=int64, numpy=2234>

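You can also find the standard deviation (tf.math.reduce_std()) and variance (tf.math.reduce_variance()) of the elements in a tensor using similar methods. Both expect a floating-point tensor, so cast an integer tensor such as E with tf.cast() first.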
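One caveat worth checking: on integer tensors, tf.reduce_mean() also returns an integer. That is why the mean of E above printed as 44 rather than 44.68. This sketch uses a hypothetical small tensor E_small to show the truncation, then computes the float versions of the mean, variance and standard deviation (the variance here is the population variance, dividing by the number of elements):

```python
import tensorflow as tf

E_small = tf.constant([1, 2, 3, 4])  # hypothetical int32 tensor

# On an integer tensor the mean is an integer too (2.5 becomes 2)
print(tf.reduce_mean(E_small).numpy())  # 2

# Cast to float first for a fractional mean, variance and standard deviation
E_small_float = tf.cast(E_small, dtype=tf.float32)
mean = tf.reduce_mean(E_small_float)
variance = tf.math.reduce_variance(E_small_float)
std = tf.math.reduce_std(E_small_float)
print(mean.numpy(), variance.numpy(), std.numpy())  # 2.5, 1.25 and roughly 1.118
```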

Finding the positional maximum and minimum

How about finding the position in a tensor where the maximum value occurs?

This is helpful when you want to line up your labels (say ['Green', 'Blue', 'Red']) with your prediction probabilities tensor (e.g. [0.98, 0.01, 0.01]).

In this case, the predicted label (the one with the highest prediction probability) would be 'Green'.

You can find the position of the maximum (and, if required, the minimum) with the following:

* tf.argmax() - find the position of the maximum element in a given tensor.

* tf.argmin() - find the position of the minimum element in a given tensor.

Create a tensor with 50 values between 0 and 1

F = tf.constant(np.random.random(50))
F

result

# [out] <tf.Tensor: shape=(50,), dtype=float64, numpy= array([0.07592178, 0.94322543, 0.92344447, 0.74110127, 0.08194169, 0.73354573, 0.48076312, 0.26615463, 0.03259512, 0.61108259, 0.48653824, 0.55564059, 0.32973151, 0.52283536, 0.07177982, 0.77835507, 0.99995949, 0.21620354, 0.33878906, 0.12543064, 0.38151196, 0.14105259, 0.33229439, 0.07220625, 0.83922614, 0.05093098, 0.00411336, 0.23188609, 0.4610022 , 0.69763888, 0.45159543, 0.74850182, 0.72545279, 0.1772494 , 0.45858496, 0.69012979, 0.01455465, 0.79085462, 0.07979816, 0.52151749, 0.41948966, 0.86794398, 0.24320341, 0.74226497, 0.69994342, 0.93347022, 0.392304 , 0.24266072, 0.48607792, 0.74761999])>

Find the maximum element position of F

tf.argmax(F)

result

# [out] <tf.Tensor: shape=(), dtype=int64, numpy=16>

Find the minimum element position of F

tf.argmin(F)

result

# [out] <tf.Tensor: shape=(), dtype=int64, numpy=26>

Compare the position of the maximum (tf.argmax()) with the maximum value itself (tf.reduce_max())

print(f"The maximum value of F is at position: {tf.argmax(F).numpy()}")
print(f"The maximum value of F is: {tf.reduce_max(F).numpy()}")
print(f"Using tf.argmax() to index F, the maximum value of F is: {F[tf.argmax(F)].numpy()}")
print(f"Are the two max values the same (they should be)? {F[tf.argmax(F)].numpy() == tf.reduce_max(F).numpy()}")

result

# [out] The maximum value of F is at position: 16 The maximum value of F is: 0.9999594897376615 Using tf.argmax() to index F, the maximum value of F is: 0.9999594897376615 Are the two max values the same (they should be)? True
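Going back to the labels example, predictions usually come as a batch with one row per sample. This sketch uses made-up prediction probabilities and the axis parameter of tf.argmax() to pick a label for each row:

```python
import tensorflow as tf

labels = ["Green", "Blue", "Red"]  # example class names from the text

# Hypothetical prediction probabilities: one row per sample, one column per label
pred_probs = tf.constant([[0.98, 0.01, 0.01],
                          [0.10, 0.20, 0.70],
                          [0.05, 0.90, 0.05]])

# axis=1 finds the highest-probability column within each row
pred_indices = tf.argmax(pred_probs, axis=1)
pred_labels = [labels[i] for i in pred_indices.numpy()]

print(pred_indices.numpy().tolist())  # [0, 2, 1]
print(pred_labels)                    # ['Green', 'Red', 'Blue']
```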

Squeezing a tensor (removing all single dimensions)

If you need to remove single-dimensions from a tensor (dimensions with size 1), you can use tf.squeeze().

tf.squeeze() - remove all dimensions of size 1 from a tensor.

Create a rank 5 (5 dimensions) tensor of 50 numbers between 0 and 100

G = tf.constant(np.random.randint(0, 100, 50), shape=(1, 1, 1, 1, 50))
G.shape, G.ndim

result

# [out] (TensorShape([1, 1, 1, 1, 50]), 5)

Squeeze tensor G (remove all 1 dimensions)

G_squeezed = tf.squeeze(G)
G_squeezed.shape, G_squeezed.ndim

result

# [out] (TensorShape([50]), 1)
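tf.squeeze() also accepts an axis argument when you only want to drop specific size-1 dimensions. This sketch uses a zero tensor with the same shape as G; tf.expand_dims(), shown last, does the opposite:

```python
import tensorflow as tf

G = tf.zeros((1, 1, 1, 1, 50))  # same shape as G above, filled with zeros

# Without axis, every size-1 dimension is removed
all_removed = tf.squeeze(G)
print(all_removed.shape)  # (50,)

# With axis, only the listed size-1 dimensions are removed
some_removed = tf.squeeze(G, axis=[0, 1])
print(some_removed.shape)  # (1, 1, 50)

# tf.expand_dims() adds a size-1 dimension back at the given position
restored = tf.expand_dims(all_removed, axis=0)
print(restored.shape)  # (1, 50)
```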

One-hot encoding

If you have a tensor of indices and would like to one-hot encode it, you can use tf.one_hot().

You should also specify the depth parameter (the number of classes, which sets the length of each one-hot vector).

# Create a list of indices
some_list = [0, 1, 2, 3]
# One hot encode them
tf.one_hot(some_list, depth=4)

result

# [out] <tf.Tensor: shape=(4, 4), dtype=float32, numpy= array([[1., 0., 0., 0.], [0., 1., 0., 0.], [0., 0., 1., 0.], [0., 0., 0., 1.]], dtype=float32)>

You can also specify values for on_value and off_value instead of the defaults (1 and 0).

# Specify custom values for on and off encoding
tf.one_hot(some_list, depth=4, on_value="We're live!", off_value="Offline")

result

# [out] <tf.Tensor: shape=(4, 4), dtype=string, numpy= array([[b"We're live!", b'Offline', b'Offline', b'Offline'], [b'Offline', b"We're live!", b'Offline', b'Offline'], [b'Offline', b'Offline', b"We're live!", b'Offline'], [b'Offline', b'Offline', b'Offline', b"We're live!"]], dtype=object)>
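This small sketch shows two details that are easy to miss: indices outside the range [0, depth) become a row of off_value, and tf.argmax() along the last axis recovers the original indices from valid one-hot rows.

```python
import tensorflow as tf

# Indices outside [0, depth) are encoded as a row of off_value (all zeros here)
encoded = tf.one_hot([0, 2, -1, 1], depth=3)
print(encoded.numpy().tolist())
# [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.0, 0.0, 0.0], [0.0, 1.0, 0.0]]

# tf.argmax() along the last axis turns valid one-hot rows back into indices
recovered = tf.argmax(tf.one_hot([0, 1, 2, 3], depth=4), axis=-1)
print(recovered.numpy().tolist())  # [0, 1, 2, 3]
```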

Squaring, log, square root

Many other common mathematical operations you'll want to perform at some stage probably already exist in TensorFlow.

Let's take a look at:

* tf.square() - get the square of every value in a tensor.

* tf.sqrt() - get the square root of every value in a tensor (note: the elements need to be floats or this will error).

* tf.math.log() - get the natural log of every value in a tensor (elements need to be floats).

Create a new tensor

H = tf.constant(np.arange(1, 10))
H

result

# [out] <tf.Tensor: shape=(9,), dtype=int64, numpy=array([1, 2, 3, 4, 5, 6, 7, 8, 9])>

Square it

tf.square(H)

result

# [out] <tf.Tensor: shape=(9,), dtype=int64, numpy=array([ 1, 4, 9, 16, 25, 36, 49, 64, 81])>

Change H to float32

H = tf.cast(H, dtype=tf.float32)
H

result

# [out] <tf.Tensor: shape=(9,), dtype=float32, numpy=array([1., 2., 3., 4., 5., 6., 7., 8., 9.], dtype=float32)>

Find the square root (remember to cast the tensor to a float type first, otherwise tf.sqrt() will raise an error)

tf.sqrt(H)

result

# [out] <tf.Tensor: shape=(9,), dtype=float32, numpy= array([1. , 1.4142135, 1.7320508, 2. , 2.236068 , 2.4494898, 2.6457512, 2.828427 , 3. ], dtype=float32)>

Find the log (input also needs to be float)

tf.math.log(H)

result

# [out] <tf.Tensor: shape=(9,), dtype=float32, numpy= array([0. , 0.6931472, 1.0986123, 1.3862944, 1.609438 , 1.7917595, 1.9459102, 2.0794415, 2.1972246], dtype=float32)>
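A quick sketch to confirm the float requirement and sanity-check the results. tf.sqrt() on an integer tensor raises an error; the exact exception class is not guaranteed across TensorFlow versions, so the example catches the general tf.errors.OpError as well as TypeError. On floats, the square root undoes tf.square() and tf.exp() undoes tf.math.log(), up to rounding. H_int and H_float are illustrative names:

```python
import tensorflow as tf

H_int = tf.range(1, 10)  # int32 values 1 to 9

# Integer input is rejected by tf.sqrt()
try:
    tf.sqrt(H_int)
    sqrt_int_failed = False
except (tf.errors.OpError, TypeError) as err:
    sqrt_int_failed = True
    print("tf.sqrt() on integers failed with", type(err).__name__)

H_float = tf.cast(H_int, dtype=tf.float32)

# Largest absolute round-trip errors (zero or tiny float rounding)
sqrt_error = tf.reduce_max(tf.abs(tf.sqrt(tf.square(H_float)) - H_float)).numpy()
log_error = tf.reduce_max(tf.abs(tf.exp(tf.math.log(H_float)) - H_float)).numpy()
print(sqrt_error, log_error)

# The natural log of 0 is -inf rather than an error
print(tf.math.log(tf.constant(0.0)).numpy())  # -inf
```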

Manipulating tf.Variable tensors

Tensors created with tf.Variable() can be changed in place using methods such as:

* .assign() - assign a new value to a variable tensor (the whole tensor, or a slice of it).

* .assign_add() - add to the existing values of a variable tensor and store the result in place.

Create a variable tensor

I = tf.Variable(np.arange(0, 5))
I

result

# [out] <tf.Variable 'Variable:0' shape=(5,) dtype=int64, numpy=array([0, 1, 2, 3, 4])>

Assign the final value a new value of 50

I.assign([0, 1, 2, 3, 50])

The change happens in place (the last value is now 50, not 4):

# [out] <tf.Variable 'UnreadVariable' shape=(5,) dtype=int64, numpy=array([ 0, 1, 2, 3, 50])>

Add 10 to every element in I

I.assign_add([10, 10, 10, 10, 10])

result

# [out] <tf.Variable 'UnreadVariable' shape=(5,) dtype=int64, numpy=array([10, 11, 12, 13, 60])>

Again, the change happens in place

I

result

# [out] <tf.Variable 'Variable:0' shape=(5,) dtype=int64, numpy=array([10, 11, 12, 13, 60])>
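This sketch shows two more in-place updates on the same kind of variable: assigning to a single element through slicing, and .assign_sub(). It also shows that .assign() rejects a value whose shape doesn't match the variable. The value is cast to the variable's dtype explicitly to avoid any int32/int64 mismatch:

```python
import numpy as np
import tensorflow as tf

I = tf.Variable(np.arange(0, 5))  # int64 values [0, 1, 2, 3, 4]

# Slicing a variable and calling .assign() changes only that element
I[4].assign(tf.cast(50, I.dtype))
print(I.numpy().tolist())  # [0, 1, 2, 3, 50]

# .assign_sub() is the subtraction counterpart of .assign_add()
I.assign_sub([1, 1, 1, 1, 1])
print(I.numpy().tolist())  # [-1, 0, 1, 2, 49]

# Assigning a value with a different shape is rejected
try:
    I.assign([1, 2, 3])
    shape_error = False
except (ValueError, tf.errors.InvalidArgumentError) as err:
    shape_error = True
    print("Shape mismatch:", type(err).__name__)
```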